3D Rasterization - Unifying Rasterization and Ray Casting

C. Dachsbacher, P. Slusallek, T. Davidovic, T. Engelhart, M. Philipps and I. Georgiev

Technical report of VISUS/Saarland University, 2009.

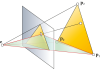

Abstract: Ray tracing and rasterization have long been considered two very different approaches to rendering images of 3D scenes that, while computing the same results for primary rays, lie at opposite ends of a spectrum. Rasterization first projects every triangle onto the image plane and enumerates all covered pixels in 2D, whereas ray tracing operates in 3D by generating rays through every pixel and then finding the first intersection with a triangle. In this paper we show that, by making a slight change that extends triangle edge functions to operate in 3D instead of 2D, the two approaches become almost identical with respect to primary rays, resulting in an efficient rasterization technique. We then use this similarity to transfer rendering concepts between the two domains. We generalize rasterization to arbitrary non-planar perspectives as known from ray tracing, while keeping all benefits of rasterization. In the reverse direction, we transfer the concept of rendering consistency, which has not been available for ray tracing thus far. We then demonstrate that the only remaining difference between rasterization and ray tracing of primary rays is scene traversal. Finally, we discuss a number of approaches from the continuum made accessible by 3D rasterization.
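To make the idea of edge functions operating in 3D more concrete, the following is a minimal sketch and not the implementation from the report. It assumes that each 3D edge function is the plane through the eye point and one triangle edge, so that a primary ray covers the triangle when its direction lies on the same side of all three planes. The helper names (`edge_normals_3d`, `intersect`) and the sample scene are hypothetical and chosen only for illustration.

```python
# Sketch of a 3D edge-function coverage test for primary rays.
# Assumption: one edge plane per triangle edge, each passing through the eye.

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def edge_normals_3d(eye, p0, p1, p2):
    """Per-triangle setup: normals of the three planes through the eye
    and each edge, plus the scalar triple product of the vertices."""
    a, b, c = sub(p0, eye), sub(p1, eye), sub(p2, eye)
    normals = (cross(b, c), cross(c, a), cross(a, b))
    det = dot(a, normals[0])
    return normals, det

def intersect(setup, d):
    """Per-ray test: evaluate the three edge functions for direction d.
    Returns (t, barycentrics) on a hit in front of the eye, else None."""
    normals, det = setup
    u, v, w = (dot(n, d) for n in normals)
    inside = (u >= 0 and v >= 0 and w >= 0) or (u <= 0 and v <= 0 and w <= 0)
    total = u + v + w
    if not inside or total == 0:
        return None
    t = det / total  # ray parameter along d
    if t <= 0:
        return None  # triangle lies behind the eye
    return t, (u / total, v / total, w / total)

# Hypothetical scene: eye at the origin, triangle in the plane z = -2.
eye = (0.0, 0.0, 0.0)
setup = edge_normals_3d(eye, (-1.0, -1.0, -2.0), (1.0, -1.0, -2.0), (0.0, 1.0, -2.0))

hit = intersect(setup, (0.0, 0.0, -1.0))     # ray straight at the triangle
miss = intersect(setup, (1.0, 0.0, -1.0))    # ray passing to the side
behind = intersect(setup, (0.0, 0.0, 1.0))   # ray pointing away
```

In this sketch the setup runs once per triangle and the per-ray work is three dot products, which mirrors how 2D edge functions are evaluated per pixel. Because the test uses ray directions rather than pixel coordinates, the directions do not have to come from a planar perspective projection.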